Google Sitemaps: A Useful SEO Tool?

Like many webmasters, when Google Sitemaps was released on 2 June 2005, I immediately created and submitted XML sitemaps for all my websites, keeping my fingers crossed that Googlebot wouldn’t find any errors when he finally downloaded them. At the time, Shiva Shivakumar posted about the launch on the Official Google Blog.

Webmasters expected big things from this experiment. Site owners hoped it would finally mean complete, perfectly crawled and regularly updated indexes of their websites, and higher rankings as a result. Most were disappointed and decided to abandon Google Sitemaps almost immediately.

One year since its launch, the interface has changed two or three times and new features have gradually appeared. For any webmasters who ditched Google Sitemaps early on, here’s a quick summary of why there’s possibly more to Google Sitemaps than just getting your site indexed. (For some unknown reason, not all these statistics are available for all websites. It’s probably also worth noting that you can view most of this information by simply adding your website to Google Sitemaps without actually submitting a sitemap.)

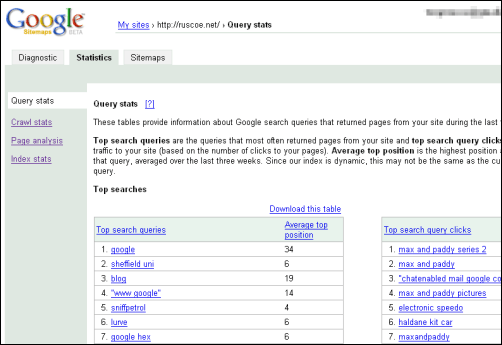

Query stats: Perhaps the most interesting of the statistics provided, these tables show which searches performed over the last three weeks returned pages from your site and what the highest average position was. The top search query clicks table lists the queries that resulted in click-throughs to your site (this should return similar results to any analytics software that shows keyword conversion, such as Google Analytics). The top search queries table lists the queries that returned your pages in the results but where visitors didn’t click through to your pages.

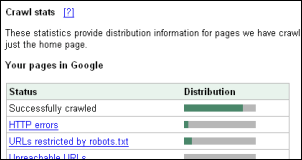

Crawl stats: As well as displaying breakdowns for the crawl status of your URLs and how many pages have a high, medium or low PageRank, this section also lists which page on your website has had the highest PageRank for the last three months. For sites with many pages, this can obviously be much quicker than checking each individual PageRank with the Google Toolbar or another tool.

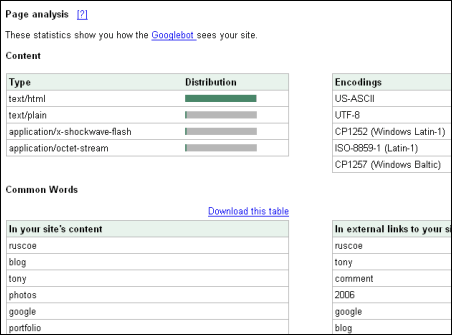

Page analysis: The Content section simply shows the content type and encoding distribution of your pages, but the Common Words section is more interesting, displaying which words most commonly appear on your website and which words are the most common in external links to your site. (Unfortunately, both tables only list words rather than phrases but this should still give you a good idea of why people are finding you and how others are linking to you.)
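If the Common Words tables aren't available for your site, you can get a rough local approximation of the first one yourself. The sketch below is only that: it assumes you already have the visible text of your pages as plain strings, and the function name and stop-word list are my own inventions, not anything Google publishes about how it builds its table.

```python
import re
from collections import Counter

# Hypothetical stop-word list for illustration; extend it for your own content.
STOP_WORDS = {"the", "a", "an", "and", "or", "of", "to", "in", "for", "is", "on", "with"}


def common_words(page_texts, top_n=10):
    """Return the top_n most frequent non-stop words across the given page texts."""
    counts = Counter()
    for text in page_texts:
        # Lowercase the text and keep runs of letters, digits and apostrophes.
        words = re.findall(r"[a-z0-9']+", text.lower())
        counts.update(word for word in words if word not in STOP_WORDS)
    return counts.most_common(top_n)


if __name__ == "__main__":
    pages = ["Cheap widgets and blue widgets", "Widgets for sale"]
    for word, count in common_words(pages, top_n=3):
        print(word, count)
```

Like the real tables, this counts single words rather than phrases, so treat the output as a hint rather than a ranking report.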

How could any of this be useful to a webmaster?

- If you’re seeing your key phrases in the top search queries but not in the top search query clicks, you should probably pay more attention to increasing your rankings for the phrases that aren’t getting the clicks, especially if your average top position is low. If your key phrases don’t appear in either of these lists, you should reconsider whether users are actually likely to search for these phrases in the first place. Using tools like Google Trends and the Overture Keyword Selector Tool, you should be able to quickly see which terms could be more popular.

- When optimizing your site for specific key phrases, you could use Crawl stats to identify which page has the highest PageRank and make sure your key phrases appear on that page, as they should then carry more weight in search results.

- If the words appearing in the common words lists aren’t the same as your target keywords, you should probably be rewriting some of your content. And if you’re paranoid about why people are linking to your website, check the common words appearing in external links for any Googlebombs! (If the webmaster for www.whitehouse.gov uses Google Sitemaps, he will undoubtedly see “failure” as the most common word appearing in external links.)
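The first check in the list above is easy to do by eye for a handful of phrases, but a few lines of Python can sort a longer list. This is a minimal sketch that assumes you have copied the two Query stats tables into plain Python lists by hand; the function name and the three bucket names are hypothetical, chosen only for this example.

```python
def triage_key_phrases(key_phrases, top_queries, top_query_clicks):
    """Sort key phrases into three buckets based on the two Query stats tables."""
    shown = {query.lower() for query in top_queries}
    clicked = {query.lower() for query in top_query_clicks}

    report = {"getting_clicks": [], "shown_not_clicked": [], "not_appearing": []}
    for phrase in key_phrases:
        key = phrase.lower()
        if key in clicked:
            # Already bringing in visitors.
            report["getting_clicks"].append(phrase)
        elif key in shown:
            # Appears in results but nobody clicks: work on the ranking.
            report["shown_not_clicked"].append(phrase)
        else:
            # In neither table: reconsider whether people search for it at all.
            report["not_appearing"].append(phrase)
    return report


if __name__ == "__main__":
    report = triage_key_phrases(
        key_phrases=["blue widgets", "cheap widgets", "widget repair"],
        top_queries=["blue widgets", "cheap widgets"],
        top_query_clicks=["Blue Widgets"],
    )
    for bucket, phrases in report.items():
        print(bucket, phrases)
```

Matching here is exact apart from letter case, so a phrase that differs by even one word from what Google lists will land in the last bucket.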

Google Sitemaps was created by Google to help them improve their index, in the hope that webmasters would take some of the strain out of crawling the web. Did Google always plan to add the statistics discussed here? I doubt it. I think it’s more likely that they realised webmasters expected something in return for helping Google to improve their index, especially since their indexing and rankings didn’t appear to be improved by submitting sitemaps.

In conclusion, is Google Sitemaps useful or just interesting? Do you use Google Sitemaps? If so, what do you use it for? What do you think the future holds for Google Sitemaps? Has it failed miserably, or succeeded beyond their wildest dreams?
